- Overview

- Problem Summary

- Scenario Background

- Join Types Background

- Example Data

- Example Join

- Summary of Test Results

- Key Findings of Tests

- What Changes are Needed in Power BI and Snowflake

- Power BI against Snowflake Tests

- Back to the Real World

- SQL Server SQL Tests

- Revisiting Snowflake

- Appendix: SQL Server Data Generation Process

Overview

This page starts with a summary of the problem then walks through some examples in Snowflake and SQL Server.

A quick note about downloads

For some reason, clicking the download links on this page doesn’t seem to download the files, but copying the links and pasting them into a new tab in Chrome does seem to work.

Problem Summary

There are two parts to this problem.

Problem Part 1

Power BI generates SQL that performs very poorly against Snowflake (and possibly other database platforms) when joining nullable columns, e.g. in the “Merge Queries” action in the Power BI query editor.

Specifically, the SQL generated prevents the use of the “hash join” physical join algorithm that has been one of the main algorithms used for decades by database engines when executing joins between large tables. This forces database engines to fall-back to using very inefficient physical joins (e.g. loop/cartesian join) that can perform orders of magnitude slower.

The reason why Power BI generates different SQL for non-nullable vs. nullable columns in a join is explained in the “Problem Part 2” section below.

Join Created By Power BI for non-nullable columns against Snowflake and SQL Server

X INNER JOIN Y

ON X.a = Y.bJoin Created By Power BI for nullable columns against Snowflake

X INNER JOIN Y

ON (X.a = Y.b and X.a IS NOT NULL and Y.b IS NOT NULL) OR (X.a IS NULL AND Y.b IS NULL)Not only is this more convoluted than the SQL Server equivalent, it is particularly ugly SQL because Snowflake has a built-in function that expresses the intent much more clearly:

X INNER JOIN Y

ON EQUAL_NULL(X.a, Y.b)Join Created By Power BI for nullable columns against SQL Server

X INNER JOIN Y

ON (X.a = Y.b) OR (X.a IS NULL AND Y.b IS NULL)Full query examples are included later on this page.

Problem Part 2

On a more philosophical level, Power BI is matching null values just like any other value. This behaviour is very ill-advised in my opinion. NULL represents “no information”. It should not be treated as just another value, at least not without an option to control this behaviour.

For example, in a customer dimension, if there is a CustomerAlternateKey (as used in the example below), then it would be stupid to say all customers with a NULL CustomerAlternateKey are the same customer – and yet, in effect, because Power BI joins null values just like any other value, this is what it is doing. So both erroneous cross-matches and duplication will likely arise.

For example, consider the following simple customer and sales data:

If these sets of data were joined in SQL via inner join, the null values in the customer alternate key column and sale key column would not match each other, resulting in:

However, when these sets of data are joined via the “Merge Queries” inner join action in Power BI the resulting data is:

Note that Power BI has treated null like any other value and the join has duplicated sales. For analysts used to the behaviour of SQL, this is pretty wild behaviour.

A sample Power BI PBIX file containing the simple example above can be downloaded from here.

Scenario Background

We’ve recently moved to using Snowflake as our DW backend platform. It’s a fantastic modern machine, generally speaking able to easily process queries across large datasets – from performance, SQL-compatibility and cost perspectives. So we were puzzled when we saw a seemingly simple query generated by Power BI that was taking an extremely long time to execute (and on Snowflake, time=cost).

When I looked into the problem, I admit I was surprised at the cause of the problem and the rather naïve SQL generated by Power BI that seems to ignore how actual database engines in the real-world execute joins (specifically, they greatly prefer simple equality joins).

I’ve also recreated the scenario in both Snowflake and SQL Server using some sample data (based on AdventureWorksDW) for a couple of reasons:

- I can’t share the original code since it is part of our internal DW – so using sample data is a simple alternative.

- There are some commonalities and some differences in the behaviour of Power BI between the two platforms – but the behaviour in both is problematic.

- The query plans in SQL Server are a bit richer (or at least I am more familiar with them) so are a helpful way to investigate the problem.

- To show that this issue affects more than Snowflake (my hunch is most/all major DB platforms would be affected).

- So that this blog post can hopefully be used to establish a conversation with Microsoft on the issue.

Join Types Background

Before we get into the detail, I am conscious some people may not understand what we mean by logical vs. physical join types, so here is a really quick intro.

Logical Joins

When most people talk about join types, they talk about inner, left outer, right outer, full outer, etc. These are logical joins, i.e. they describe functionally how data rows from two tables should be joined together. What they don’t describe is exactly how the database engine will execute the join internally.

Physical Joins

Physical joins describe the algorithm that a database engine will use to perform a logical join. So for example, let’s take an inner join… there are actually several different algorithms that SQL Server (or any database platform) could use to actually do the join. The end results will be the same* whichever algorithm is chosen, but each possible algorithm that could be used has different pros and cons. Some work faster for smaller row counts, some need sorted data, some require more memory to execute than others, etc. It is the job of the query optimiser inside the database engine to choose which physical join algorithm to use based on expected row counts and other factors.

(* well, not exactly the same – the resulting order of the data may be different for example).

It is generally always best to let the database engine choose which join algorithm to use. It is however possible to use query hints to control some aspects of the physical join in SQL Server, which is what we will do on this page to explore this problem.

For more information on logical and physical join types, see here.

Example Data

The scenario is that, as an analyst, we have some alternative sales data that is not yet in the star schema but is in a separate table named AlternativeSales. This data however joins to the DimCustomer table from the star schema via the CustomerAlternateKey column that is present in both tables.

The AlternativeSales table contains around 3.8 million rows and the DimCustomer table contains around 18 thousand rows.

The key things to note are that the CustomerAlternateKey is nullable and a small percentage of the rows in both tables have NULL values in the CustomerAlternateKey column.

If you are interested in how the data was produced, it is detailed in the appendix at the bottom on this page.

But all key columns should be “NOT NULL” in a star schema…

This point is bound to come up at some point, so let’s get it out of the way here. In general I agree with this, but…

Whether we like it or not, the fact is Power BI is used in a range of scenarios today and analysts in the real-world are typically working with data from multiple/different sources – so in my view it’s just not credible to assume everyone will always be working only with well organised star schemas with well managed keys.

The data used in real-world analysis is often not just a simple star schema and analysts will often join data in ways that were not anticipated at design-time. (And we all know comparatively how long it takes to build star schemas, right?). I say all of this after 20 plus years of seeing how real-world teams work.

Power BI will always work best with star schemas, but I think it is wrong if very core Power BI functionality (like “Merge Queries”) only works in practice with pure star schemas.

Example Join

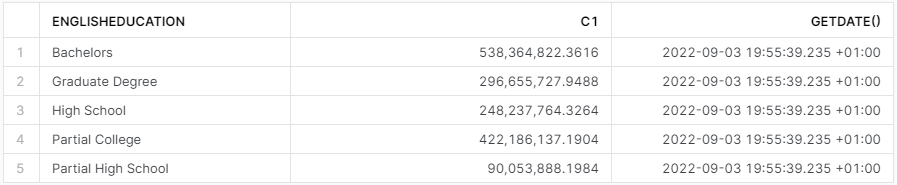

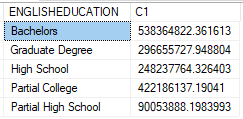

For discussion purposes, we will join the two tables to calculate the total sales amount by customer level of education (the field named EnglishEducation in DimCustomer). Writing this join in SQL, it would be:

SELECT c.EnglishEducation, SUM(s.SalesAmount) SalesAmount

FROM PbiJoinProblem.DimCustomer c

INNER JOIN PbiJoinProblem.AlternativeSales s

ON c.CustomerAlternateKey = s.CustomerAlternateKey

GROUP BY c.EnglishEducation

ORDER BY 1Output:

| EnglishEducation | SalesAmount | – | – | – | – |

| Bachelors | 538,364,822.3616 | ||||

| Graduate Degree | 296,655,727.9488 | ||||

| High School | 248,237,764.3264 | ||||

| Partial College | 422,186,137.1904 | ||||

| Partial High School | 90,053,888.1984 |

Note that the customers and sales with NULL CustomerAlternateKey values have been eliminated.

Summary of Test Results

There are lots of different tests below. This section provides a quick reference summary of the test results below:

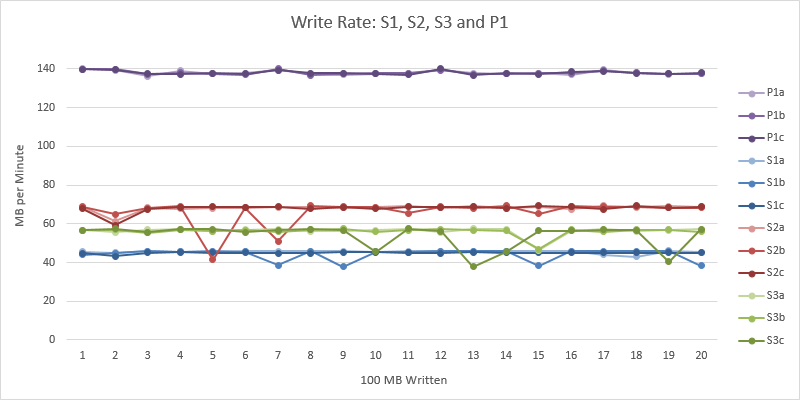

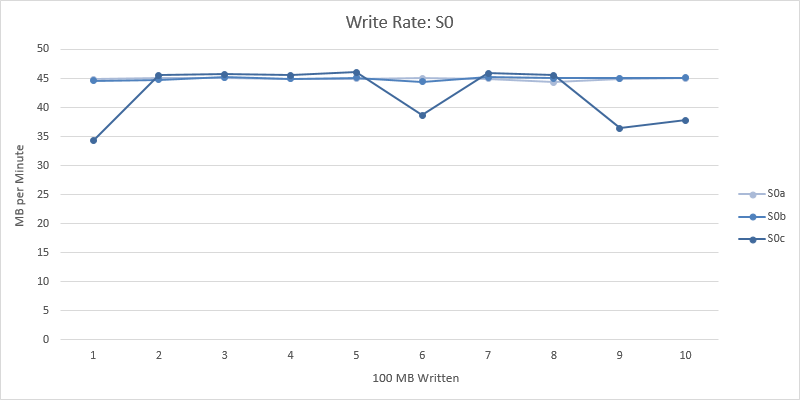

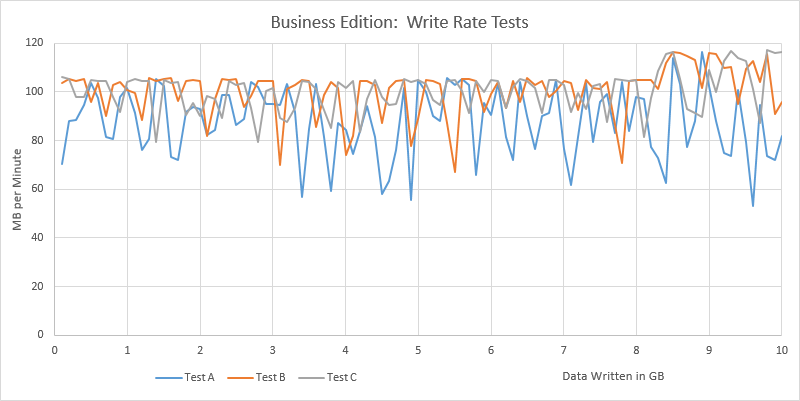

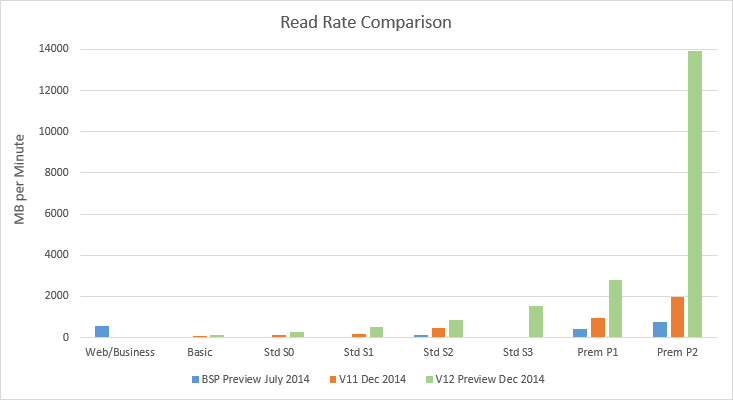

Power BI against Snowflake Test Results

With the exception of Tests 1b and 4, these tests were carried out in Power BI Desktop against Snowflake. Tests 1b and 4 were run in the Worksheets (query editor) in the Snowflake Snowsight web UI.

| Test # | Test Description | Query Time | Duplicates |

| 1a | Power BI joining two tables with nullable columns | 9 min 38 sec | Yes |

| 1b | As 1a but simple SQL join criteria | 1 sec | No |

| 2 | As test 1 filtering but filtering out NULL values from join columns | 5 min 37 sec | No |

| 3 | As test 1 but no null key values in database tables | 2 sec | No |

| 4 | Direct SQL execution using simpler SQL join query that Power BI Desktop generated for SQL Server | 54 sec | Yes |

Snowflake SQL against SQL Server Test Results

These tests were taking the SQL generated in Tests 1 and 2 above, amending the syntax slightly to suit SQL Server rather than Snowflake (but not changing the join criteria) and then executing in SQL Server via SSMS.

| Test # | Test Description | Query Time | Duplicates |

| 1a | SQL Server syntax SQL from Test 1a above | 1 min 3 secs | Yes |

| 1b | SQL Server syntax SQL from Test 1b above | 1 sec | No |

| 2 | SQL Server syntax SQL from Test 2 above | 1 sec | No |

| 3 | SQL Server syntax SQL from Test 3 above | 1 sec | No |

Power BI against SQL Server Test Results

These tests were carried out in Power BI Desktop against SQL Server.

| Test # | Test Description | Query Time | Duplicates |

| 1 | Power BI joining two tables with nullable columns | 2 secs | Yes |

| 2 | As test 1 filtering but out NULL values from join columns | 5 secs | No |

Key Findings of Tests

The SQL generated by Power BI against Snowflake (see Test 1) when joining nullable columns includes particularly convoluted join criteria. The join is more convoluted than the equivalent join generated by Power BI against SQL Server.

The SQL Server query optimiser also seems to have at least one join additional optimisation that Snowflake doesn’t have (compare the results of Snowflake Test 4 vs. Power BI against SQL Test 1) – however this only works because of the simpler join criteria generated by Power BI against SQL Server.

A great deal of this mess is caused by the fact Power BI insists on matching null values in joins in a way that SQL does not, i.e. null==null is TRUE in a Power BI join but FALSE in a SQL join.

What Changes are Needed in Power BI and Snowflake

Power BI

Change 1

An additional setting (checkbox) in Power BI Desktop in the “Merge Queries” function (i.e. an additional argument in the M Table.NestedJoin() function) that controls whether NULL values match in a join would be greatly beneficial. The checkbox could be named “Match NULL values” (default can be TRUE to match todays behaviour). If the checkbox was unticked, then the SQL generated should revert to the native SQL join types, e.g.

“Match NULL values” ticked (default):

X INNER JOIN Y

ON (X.a = Y.b and X.a IS NOT NULL and Y.b IS NOT NULL) OR (X.a IS NULL AND Y.b IS NULL)See “Change 2” below re: the superfluous SQL that is striked through above.

“Match NULL values” unticked:

X INNER JOIN Y

ON X.a = Y.bThis would solve both of the problems mentioned at the start of this page.

So as an example, we want to be able to generate this SQL against Snowflake:

select "ENGLISHEDUCATION",

sum({ fn convert("SALESAMOUNT", SQL_DOUBLE) }) as "C1"

from

(

select "OTBL"."CUSTOMERALTERNATEKEY",

"OTBL"."SALESAMOUNT",

"ITBL"."ENGLISHEDUCATION"

from "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."ALTERNATIVESALES" as "OTBL"

inner join "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."DIMCUSTOMER" as "ITBL" on "OTBL"."CUSTOMERALTERNATEKEY" = "ITBL"."CUSTOMERALTERNATEKEY"

) as "ITBL"

group by "ENGLISHEDUCATION"

order by "ENGLISHEDUCATION"

LIMIT 1000 OFFSET 0Instead of this more convoluted SQL:

select "ENGLISHEDUCATION",

sum({ fn convert("SALESAMOUNT", SQL_DOUBLE) }) as "C1"

from

(

select "OTBL"."CUSTOMERALTERNATEKEY",

"OTBL"."SALESAMOUNT",

"ITBL"."ENGLISHEDUCATION"

from "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."ALTERNATIVESALES" as "OTBL"

inner join "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."DIMCUSTOMER" as "ITBL" on (("OTBL"."CUSTOMERALTERNATEKEY" = "ITBL"."CUSTOMERALTERNATEKEY" and not "OTBL"."CUSTOMERALTERNATEKEY" is null) and not "ITBL"."CUSTOMERALTERNATEKEY" is null or "OTBL"."CUSTOMERALTERNATEKEY" is null and "ITBL"."CUSTOMERALTERNATEKEY" is null)

) as "ITBL"

group by "ENGLISHEDUCATION"

order by "ENGLISHEDUCATION"

LIMIT 1000 OFFSET 0Change 2

It would be better if Power BI could generate the same slightly simpler join SQL against Snowflake as it does against SQL Server (i.e. without the SQL striked through above). So even if the checkbox described above were checked to match null values in joins, the SQL generated by Power BI against Snowflake would look like:

select "ENGLISHEDUCATION",

sum({ fn convert("SALESAMOUNT", SQL_DOUBLE) }) as "C1"

from

(

select "OTBL"."CUSTOMERALTERNATEKEY",

"OTBL"."SALESAMOUNT",

"ITBL"."ENGLISHEDUCATION"

from "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."ALTERNATIVESALES" as "OTBL"

inner join "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."DIMCUSTOMER" as "ITBL" on ("OTBL"."CUSTOMERALTERNATEKEY" = "ITBL"."CUSTOMERALTERNATEKEY") or ("OTBL"."CUSTOMERALTERNATEKEY" is null and "ITBL"."CUSTOMERALTERNATEKEY" is null)

) as "ITBL"

group by "ENGLISHEDUCATION"

order by "ENGLISHEDUCATION"

LIMIT 1000 OFFSET 0Snowflake

If Power BI were able to generate the simpler SQL Server join SQL against Snowflake, then an optimisation is needed in Snowflake to allow HASH joins to be executed more efficiently for join criteria that looks like the join criteria immediately above, i.e.

X INNER JOIN Y

ON (X.a = Y.b) OR (X.a IS NULL AND Y.b IS NULL)This can equally be expressed in Snowflake as:

X INNER JOIN Y

ON EQUAL_NULL(X, Y)e.g.

select "ENGLISHEDUCATION",

sum({ fn convert("SALESAMOUNT", SQL_DOUBLE) }) as "C1"

from

(

select "OTBL"."CUSTOMERALTERNATEKEY",

"OTBL"."SALESAMOUNT",

"ITBL"."ENGLISHEDUCATION"

from "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."ALTERNATIVESALES" as "OTBL"

inner join "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."DIMCUSTOMER" as "ITBL" on EQUAL_NULL("OTBL"."CUSTOMERALTERNATEKEY", "ITBL"."CUSTOMERALTERNATEKEY")

) as "ITBL"

group by "ENGLISHEDUCATION"

order by "ENGLISHEDUCATION"

LIMIT 1000 OFFSET 0Power BI against Snowflake Tests

Snowflake Setup

If you wish to create this in your own Snowflake environment, the create table scripts are below:

CREATE TABLE S01_PBI_JOIN_PROBLEM.DimCustomer(

CustomerKey int NOT NULL,

GeographyKey int NULL,

CustomerAlternateKey nvarchar(15) NULL,

Title nvarchar(8) NULL,

FirstName nvarchar(50) NULL,

MiddleName nvarchar(50) NULL,

LastName nvarchar(50) NULL,

NameStyle boolean NULL,

BirthDate date NULL,

MaritalStatus nchar(1) NULL,

Suffix nvarchar(10) NULL,

Gender nvarchar(1) NULL,

EmailAddress nvarchar(50) NULL,

YearlyIncome number NULL,

TotalChildren tinyint NULL,

NumberChildrenAtHome tinyint NULL,

EnglishEducation nvarchar(40) NULL,

SpanishEducation nvarchar(40) NULL,

FrenchEducation nvarchar(40) NULL,

EnglishOccupation nvarchar(100) NULL,

SpanishOccupation nvarchar(100) NULL,

FrenchOccupation nvarchar(100) NULL,

HouseOwnerFlag nchar(1) NULL,

NumberCarsOwned tinyint NULL,

AddressLine1 nvarchar(120) NULL,

AddressLine2 nvarchar(120) NULL,

Phone nvarchar(20) NULL,

DateFirstPurchase date NULL,

CommuteDistance nvarchar(15) NULL

);CREATE TABLE S01_PBI_JOIN_PROBLEM.AlternativeSales(

CustomerAlternateKey nvarchar(15) NULL,

ProductKey int NOT NULL,

OrderDateKey int NOT NULL,

CurrencyKey int NOT NULL,

SalesOrderNumber nvarchar(20) NOT NULL,

SalesOrderLineNumber tinyint NOT NULL,

SalesAmount number(38, 12) NOT NULL

);The tables can be loaded using:

copy into S01_PBI_JOIN_PROBLEM.DimCustomer

from @LOADING.S3_STAGE/01-pbi-joining-problem/DimCustomer.txt.gz

FILE_FORMAT = ( FORMAT_NAME = 'LOADING.GZIP_TSV');

copy into S01_PBI_JOIN_PROBLEM.AlternativeSales

from @LOADING.S3_STAGE/01-pbi-joining-problem/AlternativeSales.txt.gz

FILE_FORMAT = ( FORMAT_NAME = 'LOADING.GZIP_TSV');The file format is defined as:

CREATE OR REPLACE FILE FORMAT LOADING.GZIP_TSV

TYPE = CSV

COMPRESSION = GZIP

FIELD_DELIMITER = '\t'

SKIP_HEADER = 1

BINARY_FORMAT = BASE64;The two data files used can be downloaded from:

http://misc.cbailiss.me.uk/pbijoin/DimCustomer.txt.gz

http://misc.cbailiss.me.uk/pbijoin/AlternativeSales.txt.gz

Test 1 – Joining Nullable Columns

We build two queries in the Power BI Query Builder and join them using “Merge Queries”, specifying the join type as “inner join”.

Query dependencies:

DimCustomer query:

AlternativeSales query (very slow to execute):

Both queries are disabled – neither is set to load into the data model as everything we need to do can be done in the query designer. We are only interested in the AlternativeSales query. This query takes an incredible 9 min 38 sec to execute.

The Power BI PBIX can be downloaded from here.

The results eventually are:

The numbers are hard to read above, but the level of double counting is clear. Instead of 538 million for the first number we now have 222 billion due to the Power BI null matching behaviour.

The SQL generated by Power BI is:

select "ENGLISHEDUCATION",

sum({ fn convert("SALESAMOUNT", SQL_DOUBLE) }) as "C1"

from

(

select "OTBL"."CUSTOMERALTERNATEKEY",

"OTBL"."SALESAMOUNT",

"ITBL"."ENGLISHEDUCATION"

from "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."ALTERNATIVESALES" as "OTBL"

inner join "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."DIMCUSTOMER" as "ITBL" on (("OTBL"."CUSTOMERALTERNATEKEY" = "ITBL"."CUSTOMERALTERNATEKEY" and not "OTBL"."CUSTOMERALTERNATEKEY" is null) and not "ITBL"."CUSTOMERALTERNATEKEY" is null or "OTBL"."CUSTOMERALTERNATEKEY" is null and "ITBL"."CUSTOMERALTERNATEKEY" is null)

) as "ITBL"

group by "ENGLISHEDUCATION"

order by "ENGLISHEDUCATION"

LIMIT 1000 OFFSET 0Note the convoluted join condition in the SQL above:

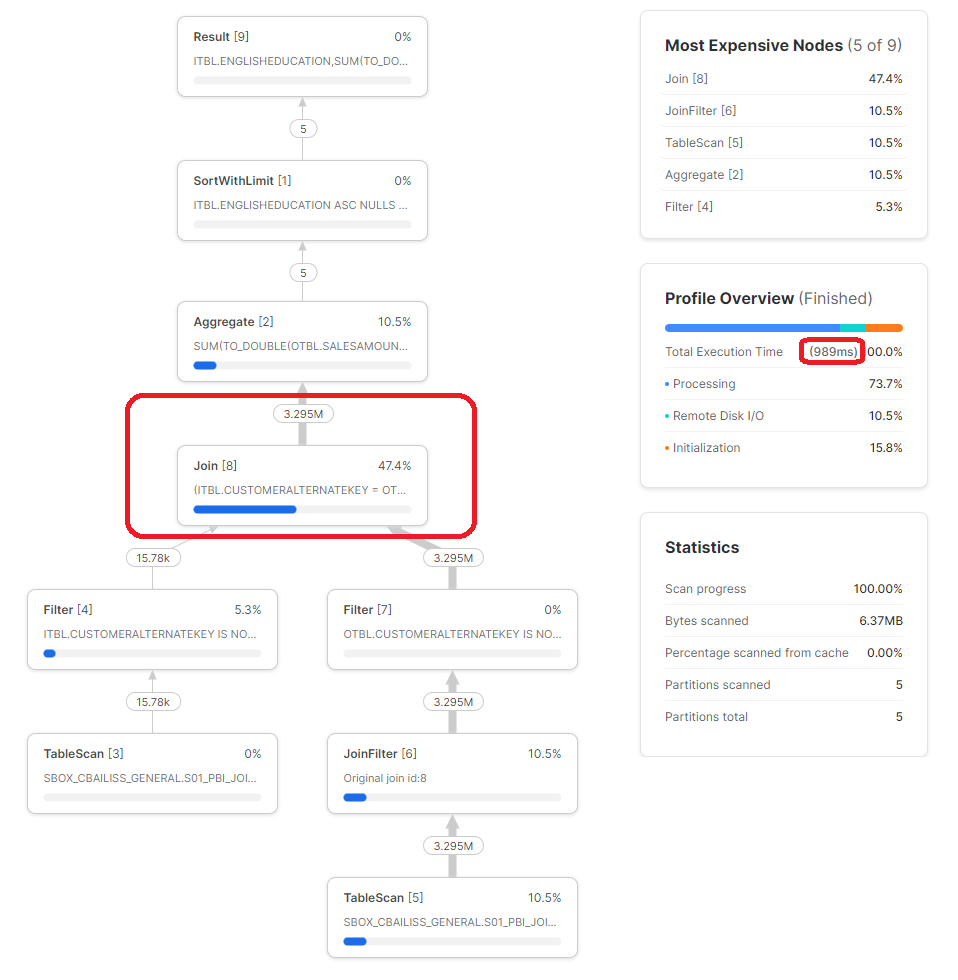

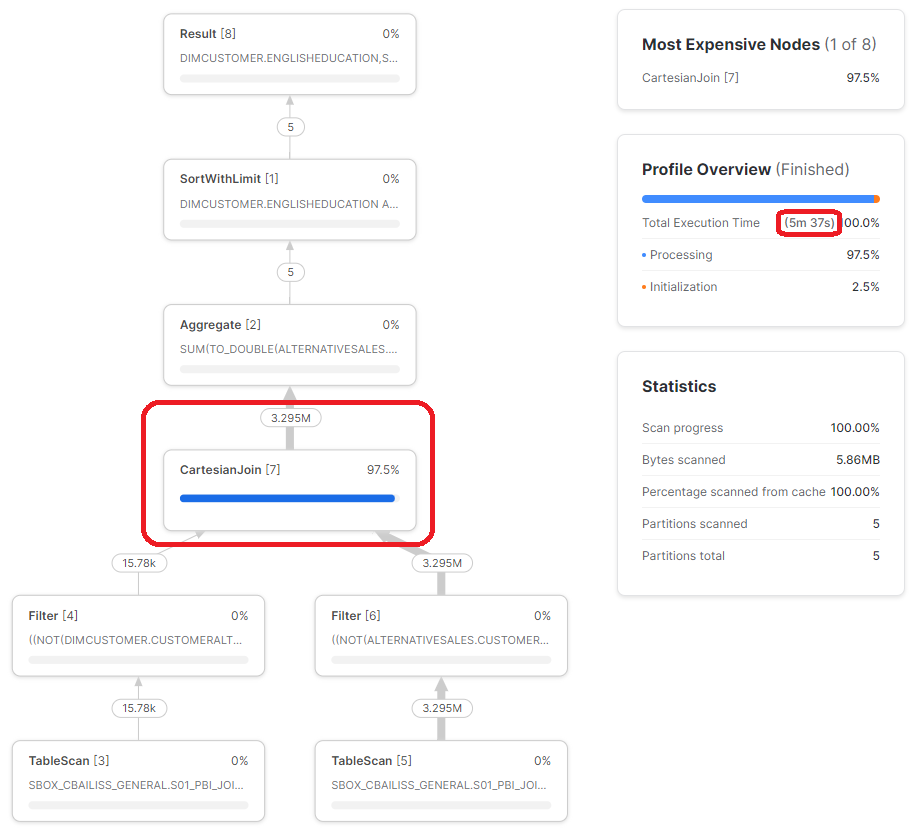

inner join "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."DIMCUSTOMER" as "ITBL" on (("OTBL"."CUSTOMERALTERNATEKEY" = "ITBL"."CUSTOMERALTERNATEKEY" and not "OTBL"."CUSTOMERALTERNATEKEY" is null) and not "ITBL"."CUSTOMERALTERNATEKEY" is null or "OTBL"."CUSTOMERALTERNATEKEY" is null and "ITBL"."CUSTOMERALTERNATEKEY" is null)This forces what Snowflake calls a cartesian join in the query plan (which I think is essentially the same as a loop join in SQL Server):

Look at the incredible number of rows (1.5 billion) coming out of the cartesian join!

As a comparison, we can take the SQL generated by Power BI and simplify the join back to a simple inner join. We add in GETDATE() as a simple way of ensuring the query executes and the results do not come from the Snowflake results cache:

select "ENGLISHEDUCATION",

sum({ fn convert("SALESAMOUNT", SQL_DOUBLE) }) as "C1",

GETDATE()

from

(

select "OTBL"."CUSTOMERALTERNATEKEY",

"OTBL"."SALESAMOUNT",

"ITBL"."ENGLISHEDUCATION"

from "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."ALTERNATIVESALES" as "OTBL"

inner join "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."DIMCUSTOMER" as "ITBL" on "OTBL"."CUSTOMERALTERNATEKEY" = "ITBL"."CUSTOMERALTERNATEKEY"

)

group by "ENGLISHEDUCATION"

order by "ENGLISHEDUCATION"

LIMIT 1000 OFFSET 0;As expected, this query executes in less than a second, produces the expected values and the query plan looks more sensible:

Test 2 – Joining Filtered Nullable Columns

A variation of the above test was carried out where the two queries in Power BI each had a step added that filtered out NULL CustomerAlternateKey values prior to the “Merge Queries” step.

The Power BI PBIX can be downloaded from here

This query generated the expected results (i.e. not double counting):

Power BI generated the following SQL for Snowflake:

select "ENGLISHEDUCATION",

sum({ fn convert("SALESAMOUNT", SQL_DOUBLE) }) as "C1"

from

(

select "OTBL"."CUSTOMERALTERNATEKEY",

"OTBL"."SALESAMOUNT",

"ITBL"."ENGLISHEDUCATION"

from

(

select "CUSTOMERALTERNATEKEY",

"SALESAMOUNT"

from "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."ALTERNATIVESALES"

where not "CUSTOMERALTERNATEKEY" is null

) as "OTBL"

inner join

(

select "CUSTOMERALTERNATEKEY",

"ENGLISHEDUCATION"

from "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."DIMCUSTOMER"

where not "CUSTOMERALTERNATEKEY" is null

) as "ITBL" on (("OTBL"."CUSTOMERALTERNATEKEY" = "ITBL"."CUSTOMERALTERNATEKEY" and not "OTBL"."CUSTOMERALTERNATEKEY" is null) and not "ITBL"."CUSTOMERALTERNATEKEY" is null or "OTBL"."CUSTOMERALTERNATEKEY" is null and "ITBL"."CUSTOMERALTERNATEKEY" is null)

) as "ITBL"

group by "ENGLISHEDUCATION"

order by "ENGLISHEDUCATION"

LIMIT 1000 OFFSET 0Note the additional sub-queries in the SQL, each containing a WHERE clause to eliminate null values.

This resulted in a query that was a little quicker to execute (5 min 37 secs) but was still ultimately very slow compared to the optimum query. Even though the null values have been eliminated, Snowflake still executed the query with a cartesian join.

Test 3 – Joining Nullable Columns Containing No Null Values

As a quick final test, I deleted the rows from each table containing NULL CustomerAlternateKey values and then executed the original queries from the PBIX file used in Test 1. In this case, the query executed in a couple of seconds. Either Power BI recognised there were no null values present and generated the simpler SQL or Snowflake used data statistics to recognise no null values are present.

While this is an interesting result, it is not really relevant to the main problem – which is how Power BI joins two tables when null values are present.

Back to the Real World

In our internal DW, the tables that we were joining were significantly bigger (20 million rows in each) and the cartesian join resulted in a Snowflake query execution time that was predicted to be four days (the query was stopped after 1 hour). As an interesting aside, at standard Snowflake pay-as-you-go rates, that query would have cost $480 and produced meaningless junk.

As a comparison, when the simple inner join query was executed with our internal DW data, Snowflake was able to complete the query (joining 20 million rows) in a couple of seconds. 4 days vs. a couple of seconds!

SQL Server SQL Tests

To look at the problem from a different perspective, we will now investigate it in SQL Server.

We will approach this in two parts. Firstly, we will execute the SQL we captured above in SSMS against SQL Server, to see the join algorithms that SQL Server is able to use. Then we will build the same test cases in Power BI Desktop that we used against Snowflake above to see how Power BI generates the equivalent SQL for SQL Server.

SQL Server Setup

A SQL Server 2019 database backup file containing test data can be downloaded from here.

Snowflake SQL against SQL Server Test Results

Test 1 – Joining Nullable Columns

The SQL from Snowflake Test 1 above written in SQL Server syntax is:

select TOP 1000 ENGLISHEDUCATION,

sum({ fn convert(SALESAMOUNT, SQL_DOUBLE) }) as C1

from

(

select OTBL.CUSTOMERALTERNATEKEY,

OTBL.SALESAMOUNT,

ITBL.ENGLISHEDUCATION

from PbiJoinProblem.ALTERNATIVESALES as OTBL

inner join PbiJoinProblem.DIMCUSTOMER as ITBL on ((OTBL.CUSTOMERALTERNATEKEY = ITBL.CUSTOMERALTERNATEKEY and not OTBL.CUSTOMERALTERNATEKEY is null) and not ITBL.CUSTOMERALTERNATEKEY is null or OTBL.CUSTOMERALTERNATEKEY is null and ITBL.CUSTOMERALTERNATEKEY is null)

) as ITBL

group by ENGLISHEDUCATION

order by ENGLISHEDUCATIONThe query results include the double counting:

The query executed in 1 min 3 secs using a loop join physical join and possibly (if I am interpreting the query correctly) a hash match to pre-aggregate the data prior to joining – which for this set of data (lots of duplicate rows) works well to reduce the number of rows that need processing by the loop join – but would perhaps be of limited value with real data:

We can execute the simple inner loop version from Test 1:

select TOP 1000 ENGLISHEDUCATION,

sum({ fn convert(SALESAMOUNT, SQL_DOUBLE) }) as C1

from

(

select OTBL.CUSTOMERALTERNATEKEY,

OTBL.SALESAMOUNT,

ITBL.ENGLISHEDUCATION

from PbiJoinProblem.ALTERNATIVESALES as OTBL

inner join PbiJoinProblem.DIMCUSTOMER as ITBL on OTBL.CUSTOMERALTERNATEKEY = ITBL.CUSTOMERALTERNATEKEY

) as ITBL

group by ENGLISHEDUCATION

order by ENGLISHEDUCATIONThis executes in 1 sec and produces the expected results (no double counting):

The query plan included the hash join physical join:

If you try and force a hash join in the first query above by adding the HASH hint, an error results because this join criteria cannot be processed by a hash join:

select TOP 1000 ENGLISHEDUCATION,

sum({ fn convert(SALESAMOUNT, SQL_DOUBLE) }) as C1

from

(

select OTBL.CUSTOMERALTERNATEKEY,

OTBL.SALESAMOUNT,

ITBL.ENGLISHEDUCATION

from PbiJoinProblem.ALTERNATIVESALES as OTBL

inner HASH join PbiJoinProblem.DIMCUSTOMER as ITBL on ((OTBL.CUSTOMERALTERNATEKEY = ITBL.CUSTOMERALTERNATEKEY and not OTBL.CUSTOMERALTERNATEKEY is null) and not ITBL.CUSTOMERALTERNATEKEY is null or OTBL.CUSTOMERALTERNATEKEY is null and ITBL.CUSTOMERALTERNATEKEY is null)

) as ITBL

group by ENGLISHEDUCATION

order by ENGLISHEDUCATIONError:

Msg 8622, Level 16, State 1, Line 1

Query processor could not produce a query plan because of the hints defined in this query. Resubmit the query without specifying any hints and without using SET FORCEPLAN.This is the essence of the performance problem caused by the SQL generated by Power BI when joining nullable columns – the most common/efficient physical join algorithm is unable to process the join criteria.

Test 2 – Joining Filtered Nullable Columns

The SQL from Snowflake Test 2 above written in SQL Server syntax is:

select TOP 1000 ENGLISHEDUCATION,

sum({ fn convert(SALESAMOUNT, SQL_DOUBLE) }) as C1

from

(

select OTBL.CUSTOMERALTERNATEKEY,

OTBL.SALESAMOUNT,

ITBL.ENGLISHEDUCATION

from

(

select CUSTOMERALTERNATEKEY,

SALESAMOUNT

from PbiJoinProblem.ALTERNATIVESALES

where not CUSTOMERALTERNATEKEY is null

) as OTBL

inner join

(

select CUSTOMERALTERNATEKEY,

ENGLISHEDUCATION

from PbiJoinProblem.DIMCUSTOMER

where not CUSTOMERALTERNATEKEY is null

) as ITBL on ((OTBL.CUSTOMERALTERNATEKEY = ITBL.CUSTOMERALTERNATEKEY and not OTBL.CUSTOMERALTERNATEKEY is null) and not ITBL.CUSTOMERALTERNATEKEY is null or OTBL.CUSTOMERALTERNATEKEY is null and ITBL.CUSTOMERALTERNATEKEY is null)

) as ITBL

group by ENGLISHEDUCATION

order by ENGLISHEDUCATIONThe query results correctly do not include the double counting:

The query executes in around 1 sec, i.e. SQL Server is able to use the more optimal HASH join algorithm:

Test 3 – Joining Nullable Columns Containing No Null Values

If we delete the rows containing null CustomerAlternateKey values in both tables, and try executing the first SQL query for SQL Server Test 1 above, we get the same SQL Server behaviour – i.e. SQL Server appears not to use statistics to determine whether null values actually exist in order to use a HASH join.

Power BI against SQL Server Tests

Power BI often does not generate the same SQL against different data sources. So, let’s build the same queries in Power BI Desktop against SQL, including the “Merge Queries” action and look at the SQL that is generated.

Test 1 – Joining Nullable Columns

Attempting to build the queries in Power BI Desktop is painful. When adding the “Merge Queries” step, the Query Builder gets stuck reading data incredibly slowly:

It appears to be attempting to perform the join in Power BI instead of using SQL Server to perform the join.

For the PBIX file at this point, see SQL Server Example 1 from here.

To work around this problem I temporarily added a “Keep Top 1000” step before the “Merge Queries” step. This allowed me to build the rest of the query. I could then remove the “Keep Top 1000” step however if I accidentally clicked on the “Merged Queries” step, Power BI Desktop would again try to download all of the data (this is painful to work with!).

For the PBIX file at this point, see SQL Server Example 2 from here.

The final query was now able to execute:

As we might assume by now, the results include the double counting:

The SQL generated was:

select top 1000

[_].[EnglishEducation],

[_].[SalesAmount]

from

(

select [rows].[EnglishEducation] as [EnglishEducation],

sum([rows].[SalesAmount]) as [SalesAmount]

from

(

select [$Outer].[CustomerAlternateKey],

[$Outer].[SalesAmount],

[$Inner].[EnglishEducation]

from

(

select [CustomerAlternateKey],

[SalesAmount]

from [PbiJoinProblem].[AlternativeSales] as [$Table]

) as [$Outer]

inner join

(

select [CustomerAlternateKey] as [CustomerAlternateKey2],

[EnglishEducation] as [EnglishEducation]

from [PbiJoinProblem].[DimCustomer] as [$Table]

) as [$Inner] on ([$Outer].[CustomerAlternateKey] = [$Inner].[CustomerAlternateKey2] or [$Outer].[CustomerAlternateKey] is null and [$Inner].[CustomerAlternateKey2] is null)

) as [rows]

group by [EnglishEducation]

) as [_]

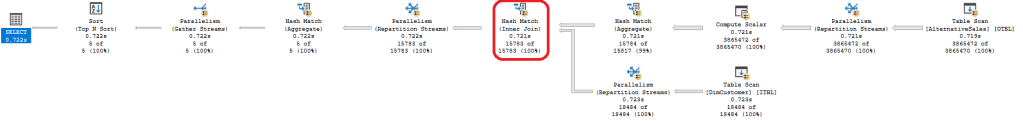

order by [_].[EnglishEducation]Interestingly, even given the slightly non-trivial join condition, the query executed in only a couple of seconds. The query plan was:

The above is interesting, it shows that Power BI is generating a simple SQL query for SQL Server than it does for Snowflake. This query can fully execute using the HASH join physical join. We will test this version of the query against Snowflake later further below on this page (see Test 4 section further below).

Test 2 – Joining Filtered Nullable Columns

For completeness, we’ll filter the two tables in Power BI to exclude NULL CustomerAlternateKey values before the merge join.

For the PBIX file at this point, see SQL Server Example 3 from here.

The results of this in Power BI, as expected, do not include the double counting:

The SQL generated by Power BI was:

select top 1000

[_].[EnglishEducation],

[_].[SalesAmount]

from

(

select [rows].[EnglishEducation] as [EnglishEducation],

sum([rows].[SalesAmount]) as [SalesAmount]

from

(

select [$Outer].[CustomerAlternateKey],

[$Outer].[SalesAmount],

[$Inner].[EnglishEducation]

from

(

select [_].[CustomerAlternateKey],

[_].[SalesAmount]

from

(

select [CustomerAlternateKey],

[SalesAmount]

from [PbiJoinProblem].[AlternativeSales] as [$Table]

) as [_]

where [_].[CustomerAlternateKey] is not null

) as [$Outer]

inner join

(

select [_].[CustomerAlternateKey] as [CustomerAlternateKey2],

[_].[EnglishEducation] as [EnglishEducation]

from

(

select [CustomerAlternateKey],

[EnglishEducation]

from [PbiJoinProblem].[DimCustomer] as [$Table]

) as [_]

where [_].[CustomerAlternateKey] is not null

) as [$Inner] on ([$Outer].[CustomerAlternateKey] = [$Inner].[CustomerAlternateKey2] or [$Outer].[CustomerAlternateKey] is null and [$Inner].[CustomerAlternateKey2] is null)

) as [rows]

group by [EnglishEducation]

) as [_]

order by [_].[EnglishEducation]This features IS NOT NULL WHERE clauses as expected and still features the simpler join criteria than was generated against Snowflake. The query executed in around 5 seconds using the following query plan:

Revisiting Snowflake

Test 4 – Power BI SQL Server Query executed against Snowflake

We take the Test 1 query generated by Power BI against SQL Server and test executing this against Snowflake. The SQL is:

select "ENGLISHEDUCATION",

sum({ fn convert("SALESAMOUNT", SQL_DOUBLE) }) as "C1"

from

(

select "OTBL"."CUSTOMERALTERNATEKEY",

"OTBL"."SALESAMOUNT",

"ITBL"."ENGLISHEDUCATION"

from "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."ALTERNATIVESALES" as "OTBL"

inner join "SBOX_CBAILISS_GENERAL"."S01_PBI_JOIN_PROBLEM"."DIMCUSTOMER" as "ITBL" on (("OTBL"."CUSTOMERALTERNATEKEY" = "ITBL"."CUSTOMERALTERNATEKEY") or "OTBL"."CUSTOMERALTERNATEKEY" is null and "ITBL"."CUSTOMERALTERNATEKEY" is null)

) as "ITBL"

group by "ENGLISHEDUCATION"

order by "ENGLISHEDUCATION"

LIMIT 1000 OFFSET 0As you might assume, the results include the double counting:

The query execution time was significantly improved at 54 seconds (instead of 9 minutes plus), but this is still much slower than the simple join execution time in Snowflake (less than 1 second). The query plan doesn’t feature the cartesian join but still features an ugly large number of rows after the join:

Appendix: SQL Server Data Generation Process

Download and Restore AdventureWorksDW

Download the AdventureWorksDW database from here. Note – ensure you download the DW version, not the OLTP version. For the record, I ran the code on this page using SQL Server 2019.

Restore the database into your SQL Server.

Prepare AdventureWorksDW

The following script created the test tables/data:

CREATE SCHEMA PbiJoinProblem;

CREATE TABLE PbiJoinProblem.[DimCustomer](

[CustomerKey] [int] NOT NULL,

[GeographyKey] [int] NULL,

[CustomerAlternateKey] [nvarchar](15) NULL,

[Title] [nvarchar](8) NULL,

[FirstName] [nvarchar](50) NULL,

[MiddleName] [nvarchar](50) NULL,

[LastName] [nvarchar](50) NULL,

[NameStyle] [bit] NULL,

[BirthDate] [date] NULL,

[MaritalStatus] [nchar](1) NULL,

[Suffix] [nvarchar](10) NULL,

[Gender] [nvarchar](1) NULL,

[EmailAddress] [nvarchar](50) NULL,

[YearlyIncome] [money] NULL,

[TotalChildren] [tinyint] NULL,

[NumberChildrenAtHome] [tinyint] NULL,

[EnglishEducation] [nvarchar](40) NULL,

[SpanishEducation] [nvarchar](40) NULL,

[FrenchEducation] [nvarchar](40) NULL,

[EnglishOccupation] [nvarchar](100) NULL,

[SpanishOccupation] [nvarchar](100) NULL,

[FrenchOccupation] [nvarchar](100) NULL,

[HouseOwnerFlag] [nchar](1) NULL,

[NumberCarsOwned] [tinyint] NULL,

[AddressLine1] [nvarchar](120) NULL,

[AddressLine2] [nvarchar](120) NULL,

[Phone] [nvarchar](20) NULL,

[DateFirstPurchase] [date] NULL,

[CommuteDistance] [nvarchar](15) NULL

);

INSERT INTO PbiJoinProblem.DimCustomer

SELECT [CustomerKey]

,[GeographyKey]

,CASE WHEN ABS(CHECKSUM(NewId())) % 14 > 11 THEN NULL ELSE [CustomerAlternateKey] END

,[Title]

,[FirstName]

,[MiddleName]

,[LastName]

,[NameStyle]

,[BirthDate]

,[MaritalStatus]

,[Suffix]

,[Gender]

,[EmailAddress]

,[YearlyIncome]

,[TotalChildren]

,[NumberChildrenAtHome]

,[EnglishEducation]

,[SpanishEducation]

,[FrenchEducation]

,[EnglishOccupation]

,[SpanishOccupation]

,[FrenchOccupation]

,[HouseOwnerFlag]

,[NumberCarsOwned]

,[AddressLine1]

,[AddressLine2]

,[Phone]

,[DateFirstPurchase]

,[CommuteDistance]

FROM dbo.DimCustomer;

CREATE TABLE PbiJoinProblem.AlternativeSales

(

[CustomerAlternateKey] [nvarchar](15) NULL,

[ProductKey] [int] NOT NULL,

[OrderDateKey] [int] NOT NULL,

[CurrencyKey] [int] NOT NULL,

[SalesOrderNumber] [nvarchar](20) NOT NULL,

[SalesOrderLineNumber] [tinyint] NOT NULL,

[SalesAmount] [money] NOT NULL

);

ALTER TABLE PbiJoinProblem.AlternativeSales REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE);

INSERT INTO PbiJoinProblem.AlternativeSales

SELECT dc.CustomerAlternateKey, f.ProductKey, f.OrderDateKey, f.CurrencyKey,

substring(CAST(newid() as varchar(50)),1,20), f.SalesOrderLineNumber, f.SalesAmount

FROM dbo.FactInternetSales f

INNER JOIN PbiJoinProblem.DimCustomer dc

ON f.CustomerKey = dc.CustomerKey;

-- run the following repeatedly (5-6 times to generate a few million rows in AlternativeSales table)

INSERT INTO PbiJoinProblem.AlternativeSales

SELECT CustomerAlternateKey, ProductKey, OrderDateKey, CurrencyKey,

substring(CAST(newid() as varchar(50)),1,20), SalesOrderLineNumber, SalesAmount

FROM PbiJoinProblem.AlternativeSalesAll tables other than the two tables created above were then dropped from the database.